TIBCO Scribe® Online Connector for Google BigQuery

The TIBCO Scribe® Online Connector for Google BigQuery allows you to integrate your Google BigQuery databases easily with other business systems such as ERP, CRM, and Marketing Automation. This Connector discovers tables, views, and columns. In addition, you can use native SQL queries to access, filter, and join your data.

Use the Google BigQuery Connector as a source or target Connection for On schedule or On event apps. This Connector is based on the Scribe.Connector.AdoNet library and CData Google BigQuery ADO.NET provider.

Use the Connector for Google BigQuery to:

- Integrate with any application across your business that uses Google BigQuery as a back end

- Move Google BigQuery data to other systems

Connector Specifications

| | Supported |
|---|---|
| Agent Types | |
| Connect on-premise | X |
| Connect cloud | X |
| Data Replication Apps | |
| Source | |
| Target | |
| On Schedule Apps | |
| Source | X |
| Target | X |
| On Event Apps | |
| Source | X |
| Target | X |
| Flows | |
| Integration | X |
| Request-Reply | X |
| Message | |

This Connector is available from the TIBCO Cloud™ Integration Marketplace. See Marketplace Connectors for more information.

Supported Entities

- Tables and Views from the Google BigQuery database are exposed as entities.

- Views support only Query.

- Updateable Views support Query, Create, Update, and Delete.

Setup Considerations

- Supports Google BigQuery versions 1, 2, and 3.

Advanced considerations, such as customizing the SSL configuration, or connecting through a firewall or a proxy, are described in the Advanced Settings section of the CData documentation.

API Usage Limits

Google BigQuery limits the number of API calls per project per day and the number of queries per second per IP address. See Quotas and limits in the Google BigQuery documentation for exact limits.

Selecting an Agent Type for Google BigQuery

Refer to TIBCO Cloud™ Integration - Connect Agents for information on available agent types and how to select the best agent for your app.

Connecting to Google BigQuery

- Select Connections from the menu.

- From the Connections page, select Create to open the Create a connection dialog.

- Select the Connector from the list to open the Connection dialog, and then enter the following information for this Connection:

- Name — This can be any meaningful name, up to 25 characters.

- Alias — An alias for this Connection name. The alias is generated from the Connection name, and can be up to 25 characters. The Connection alias can include letters, numbers, and underscores. Spaces and special characters are not accepted. You can change the alias. For more information, see Connection Alias.

- ProjectId - Id of the project where you want to connect. This is also the billing project used for executing jobs. Obtain the project Id in the Google API console: In the main menu, select API Project and copy the Id.

- DataSetId - Dataset where you want to connect and view tables.

- OAuth Verifier Code - Unique authorization code returned by Google for your account when you click the Get Verifier Code button. After saving the Connection, this code no longer displays. TIBCO Cloud™ Integration - Connect stores it for use when connecting to Google BigQuery.

- Additional Parameters - Optional field where you can specify one or more connection string parameters. See the Connection String Options section of the CData documentation for a list of parameters that can be used and their default values. Note that in some cases the CData Google BigQuery ADO.NET provider does not fully support all of the possible parameters.

Syntax for the Additional Parameters field is as follows:

- Schema name is required for tables and views in the format schemaname.tablename or schemaname.viewname. For example: Tables=scribe.mhs_test;Views=scribe.mhs_list;Timeout=300;

- All blank characters, except those within a value or within quotation marks, are ignored

- Preceding and trailing spaces are ignored unless enclosed in single or double quotes, such as Keyword=" value"

- Semicolons (;) within a value must be delimited by quotation marks

- Use a single quote (') if the value begins with a double quote (")

- Use a double quote (") if the value begins with a single quote (')

- Parameters are case-insensitive

- If a KEYWORD=VALUE pair occurs more than once in the connection string, the value associated with the last occurrence is used

- If a keyword contains an equal sign (=), it must be preceded by an additional equal sign to indicate that the equal sign is part of the keyword

- Parameters that are handled by other fields or default settings in the Connection dialog are ignored if used in the Additional Parameters field, including:

- Logfile - To enable logging, enter a value for the verbosity parameter in the Additional Parameters field. Each log file has a maximum size of 10MB. When the log file reaches 10MB, a new log file is started, up to a maximum of five files. Once there are five files, the oldest file is deleted as needed. Any CData log files generated by this setting are stored in the default Connect on-premise agent Logs directory, C:\Program Files (x86)\Scribe Software\TIBCO Scribe® Online Agent\logs\.

- MaxLogFileCount - This parameter is set by the Connector to a maximum of five files.

- MaxLogFileSize - This parameter is set by the Connector to a maximum of 10MB.

- Other

- RTK

- UseConnectionPooling

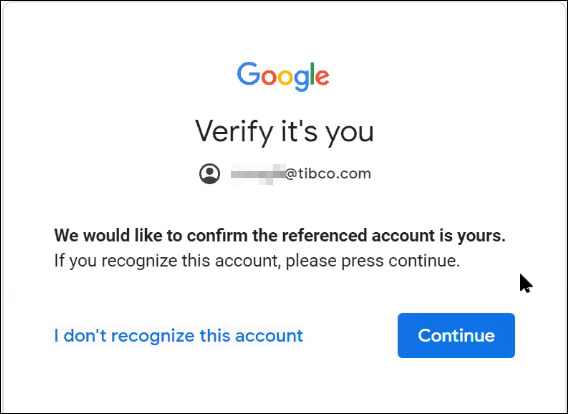

- Click the Get Verifier Code button and in the new browser tab log into your Google Platform account. If this is the first time you are accessing your account from the Connection dialog, an account confirmation message displays. Click Continue.

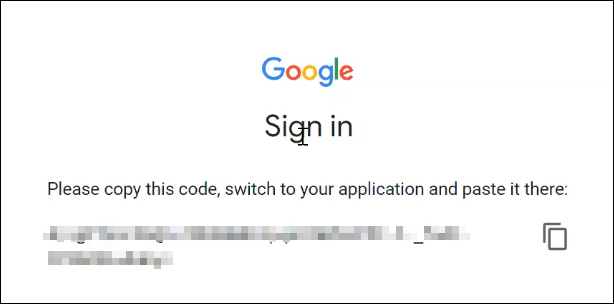

- When the Verifier Code displays, copy it and paste the code into the OAuth Verifier Code field on the Connection dialog. Note: The Verifier Code expires quickly. If you test your Connection and it fails, it may be because the code has expired. Click the Get Verifier Code button again and quickly copy and paste the new code.

After you configure and save this Connection, the Verifier Code no longer displays on the Connection dialog. TIBCO Cloud™ Integration - Connect stores it for use when connecting to Google BigQuery.

- Select Test to ensure that the agent can connect to your database. Be sure to test the Connection against all agents that use this Connection. See Testing Connections.

- Select OK/Save to save the Connection.
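The syntax rules listed above for the Additional Parameters field can be illustrated with a small helper that assembles a parameter string. This is a minimal sketch, not part of the Connector: the function names `quote_value` and `build_additional_parameters` are hypothetical, the ignored-parameter set copies only the names listed in this topic (the list may not be exhaustive), and values that contain both quote characters are not handled.

```python
# Parameters that this topic lists as handled by the Connection dialog.
# They are ignored if supplied in Additional Parameters, so the helper drops them.
IGNORED = {"logfile", "maxlogfilecount", "maxlogfilesize",
           "other", "rtk", "useconnectionpooling"}


def quote_value(value: str) -> str:
    """Wrap a value in quotation marks only when the syntax rules call for it."""
    needs_quotes = (
        ";" in value                  # semicolons must be inside quotes
        or value != value.strip()     # leading/trailing spaces are otherwise ignored
        or value[:1] in ('"', "'")    # value begins with a quote character
    )
    if not needs_quotes:
        return value
    # Single quotes if the value begins with a double quote, otherwise double quotes.
    quote = "'" if value.startswith('"') else '"'
    return f"{quote}{value}{quote}"


def build_additional_parameters(pairs) -> str:
    """Build a 'Keyword=Value;' string from (keyword, value) pairs."""
    merged = {}  # lowercased keyword -> (keyword as written, value)
    for key, value in pairs:
        key = key.strip()
        if key.lower() in IGNORED:
            continue
        # Keywords are case-insensitive and the last occurrence wins.
        merged[key.lower()] = (key, str(value))
    # An equal sign inside a keyword is doubled so it is read as part of the keyword.
    return "".join(
        f"{key.replace('=', '==')}={quote_value(value)};"
        for key, value in merged.values()
    )


print(build_additional_parameters([
    ("Tables", "scribe.mhs_test"),
    ("Views", "scribe.mhs_list"),
    ("Timeout", 300),
]))
```

With the example values from this topic, the helper produces the same string shown in the schema name rule. Building the string programmatically is only a convenience; the result is still pasted into the Additional Parameters field.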

Metadata Notes

Consider the following for Google BigQuery data fields and entity types.

- Object definitions are dynamically generated based on the table definitions within Google BigQuery for the Project and Dataset that are specified in the connection.

- The Execute Block displays on the flow designer; however, using Stored Procedures to generate metadata is not supported.

- The BIGNUMERIC datatype is not supported. If this datatype is in use, TIBCO Cloud™ Integration - Connect cannot return any metadata for this connection. If you are using BIGNUMERIC datatypes in your data, you have the following options:

- Modify the datatype for all fields that are type BIGNUMERIC.

- Use the Additional Parameters field on the Connection dialog to specify the Tables or Views that do not contain any fields that are datatype BIGNUMERIC. Metadata is retrieved only for the selected Tables. See Connection Strings in the CData documentation.
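As an illustration of the second option, the sketch below builds a Tables entry that lists only the tables with no BIGNUMERIC columns. It is a hedged example under several assumptions: the function `tables_parameter`, the dataset name, and the sample schemas are hypothetical, and the comma-separated form for listing several tables should be confirmed against the CData documentation.

```python
def tables_parameter(dataset, table_columns):
    """Return a 'Tables=...;' fragment for tables that have no BIGNUMERIC column.

    table_columns maps a table name to a {column name: BigQuery type name} dict.
    """
    safe = [
        f"{dataset}.{table}"
        for table, columns in sorted(table_columns.items())
        # startswith also catches parameterized types such as BIGNUMERIC(40, 2).
        if not any(t.strip().upper().startswith("BIGNUMERIC")
                   for t in columns.values())
    ]
    # Assumption: multiple tables are separated by commas.
    return f"Tables={','.join(safe)};" if safe else ""


# Hypothetical schemas, for illustration only.
schemas = {
    "orders": {"id": "INT64", "total": "NUMERIC"},
    "ledger": {"id": "INT64", "balance": "BIGNUMERIC"},
    "customers": {"id": "INT64", "name": "STRING"},
}
print(tables_parameter("scribe", schemas))
```

Here the `ledger` table is left out because one of its columns is BIGNUMERIC, so metadata would be requested only for the remaining two tables.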

Naming

Connection metadata must have unique entity, relationship, and field names. If your Connection metadata has duplicate names, review the source system to determine if the duplicates can be renamed.

Retry Logic

If a request fails, the Connector does not retry.

Google BigQuery Connector as an App Source

Consider the following when using the Google BigQuery Connector as an app source.

- Hierarchical data is not supported.

- Relationships are not supported.

Native Query

The Google BigQuery Connector supports SQL queries in Native Query Blocks to create customized queries for Google BigQuery. The query can be as simple or complex as you need it to be; however, it should return a single result set. The native query text is sent to Google BigQuery exactly as it is entered without any modifications.

You can use SELECT, UPDATE, INSERT, and DELETE statements. If support for Enhanced SQL is enabled, you can use Joins, Aggregate functions, Projection Functions, and Predicate Functions. For additional details, see the SQL Compliance section of the CData documentation.

After entering the SQL query, you must select Test to validate the query. Invalid queries are not accepted by the Connector. See Native Query Block and Creating Native Queries For Microsoft SQL Server for additional information.

When testing a Native Query in a flow, if the source datastore does not return any data, TIBCO Cloud™ Integration - Connect cannot build the schema for the underlying metadata and the flow cannot be saved. To allow TIBCO Cloud™ Integration - Connect to build the schema, do the following:

- Create a single temporary record in the source datastore that matches the Native Query.

- Test the Native Query and ensure that it is successful.

- Save the flow.

- Remove the temporary record from the source datastore.

Net Change

When a datetime is configured on the Query block on the Block Properties Net Change Tab to query for new and updated records, that configuration is treated as an additional filter. The Net Change datetime filter is applied as an AND after any other filters specified on the Block Properties Filter Tab. TIBCO Cloud™ Integration - Connect builds a query combining both the Net Change filter and the filters on the Filter tab. See Net Change And Filters for an example.
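The way the Net Change datetime is combined with the other filters can be pictured with the following sketch. It is illustrative only: the function `combine_filters`, the field names, the `>` comparison, and the datetime format are assumptions, and the query that TIBCO Cloud™ Integration - Connect actually generates may look different.

```python
from datetime import datetime


def combine_filters(filter_expression, net_change_field, last_run):
    """Append the Net Change comparison with AND after the Filter tab expression."""
    stamp = last_run.strftime("%Y-%m-%d %H:%M:%S")
    net_change = f"({net_change_field} > '{stamp}')"
    if not filter_expression:
        return net_change
    # The existing filters are grouped first so the AND applies to all of them.
    return f"({filter_expression}) AND {net_change}"


# Hypothetical filter and field names, for illustration only.
print(combine_filters(
    "Status = 'Open' OR Priority > 2",
    "ModifiedDate",
    datetime(2024, 1, 15, 8, 30, 0),
))
```

Grouping the Filter tab expression in parentheses matters when it contains OR conditions; without the grouping, the Net Change comparison would bind only to the last condition.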

Some Connectors for TIBCO Cloud™ Integration - Connect only support one filter. For those Connectors you can use either Net Change or one filter on the Filter tab, not both.

Filtering

- Filtering support varies by entity. For additional details, see the Data Model section of the CData documentation.

- Net Change is not supported.

Google BigQuery Connector as an App Target

Consider the following when using the Google BigQuery Connector as an app target.

- Batch processing is not supported.

- Delete and Update operations generate record errors if more than one record matches the Matching Criteria.

- There is a 30-minute delay between when you create a record and when the Google BigQuery API allows you to update or delete the created record. Attempting to update or delete a new record before the 30-minute delay has elapsed generates errors similar to the following:

Operation failed. Label: Update ZMTestTable1, Name: ZMTestTable1Update, Message: [500] Could not execute the specified command: [400] UPDATE or DELETE statement over table ZMTest.Table1 would affect rows in the streaming buffer, which is not supported

Best practice is to update or delete new records using a separate app that runs later.
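If the later-running app tracks when each record was created, a check like the one below can decide which records are old enough to update or delete. This is a minimal sketch under stated assumptions: the names `can_modify` and `split_by_readiness` are hypothetical, creation times are assumed to be timezone-aware UTC values that you record yourself, and a record is treated as eligible once exactly 30 minutes have passed.

```python
from datetime import datetime, timedelta, timezone

# Delay described in this topic between creating a record and modifying it.
STREAMING_BUFFER_DELAY = timedelta(minutes=30)


def can_modify(created_at, now=None):
    """Return True once the 30-minute delay since creation has elapsed."""
    now = now or datetime.now(timezone.utc)
    return now - created_at >= STREAMING_BUFFER_DELAY


def split_by_readiness(records, now=None):
    """Split (record_id, created_at) pairs into ready and deferred id lists."""
    now = now or datetime.now(timezone.utc)
    ready, deferred = [], []
    for record_id, created_at in records:
        (ready if can_modify(created_at, now) else deferred).append(record_id)
    return ready, deferred
```

Records in the deferred list can be left for the next scheduled run instead of being sent to Google BigQuery and generating record errors.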

TIBCO Cloud™ Integration - Connect API Considerations

License Agreement

The TIBCO End-User License Agreement for the Google BigQuery Connector describes TIBCO's and your legal obligations and requirements. TIBCO suggests that you read the End-User License Agreement.

More Information

For additional information on this Connector, refer to the Knowledge Base and Discussions in the TIBCO Community.